The project will be due at project presentations to be held Wednesday, December 13, at 3 PM EST.

Overview

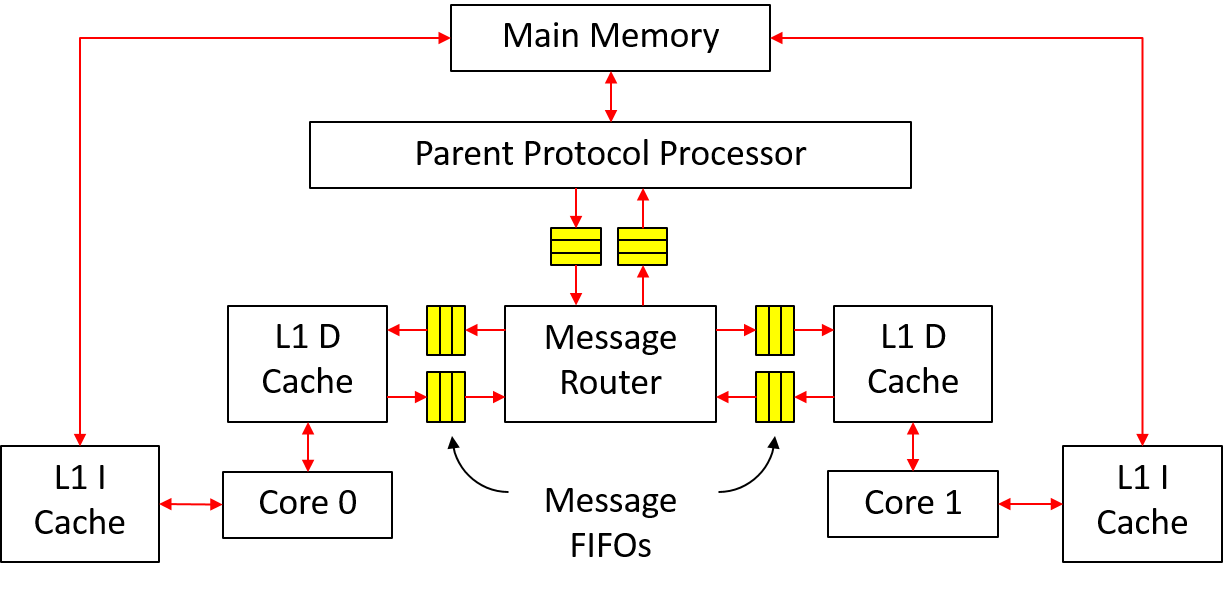

In this part of the project, we will implement a multicore system like the one shown in Figure 1 in simulation. The system consists of two cores (for developement, but should be parametrized to be compilable with 4 cores), and each core has its own private caches. The data caches (D caches) and main memory are kept coherent using the MSI protocol introduced in class. Since we don't have self-modifying programs, the instruction caches (I caches) can directly access the memory without going through any coherent transactions.

|

| Figure 1: Multicore system |

Since this system is quite complex, we have tried to divide the implementation into multiple small steps, and we have provided testbenches for each step. However, passing the testbenches does not imply that the implementation is 100% correct.

Infrastructure

In this project you are working with someone else. The first thing to do for your group is to set up the infrastructure: one of you should create an MIT github repository that is a fork of this repository. The two of you should have commit rights to this new repo. Let me know by email/piazza if your group need help for that. If one of you know how to do that, but not the other one, it is a great day to do a transfer of knowledge and teach the other one!

You should then give me read rights (and write rights if you want), to this repo (for me to be able to grade it, or help you if you need help). But it should not be entirely public (to avoid the other groups looking at it).

A good rule to work in group is to always push what you are working on, and to always pull before starting working on anything and to never keep things locally for too long. This should avoid the majority of merge conflict problems. You should also avoid changing everything overnight without telling the other one.

Implementing units of the memory hierarchy

Message FIFO

The message FIFO transfers both request and response messages. For a message FIFO from a child to the parent, it transfers upgrade requests and downgrade responses. For a message FIFO from the parent to a child, it transfers downgrade requests and upgrade responses.

The message types transferred by the message FIFO is defined in src/includes/CacheTypes.bsv as follow:

typedef struct {

CoreID child;

Addr addr;

MSI state;

Maybe#(CacheLine) data;

} CacheMemResp deriving(Eq, Bits, FShow);

typedef struct {

CoreID child;

Addr addr;

MSI state;

} CacheMemReq deriving(Eq, Bits, FShow);

typedef union tagged {

CacheMemReq Req;

CacheMemResp Resp;

} CacheMemMessage deriving(Eq, Bits, FShow);CacheMemResp is the type for both downgrade responses from a child to the parent, and upgrade responses from the parent to a child. The first field child is the ID of the D cache involved in the message passing. The type CoreID is defined in Types.bsv. The third field state is the MSI state that the child has downgraded to for a downgrade response, or the MSI state that the child will be able to upgrade to for a upgrade response.

CacheMemReq is the type for both upgrade requests from a child to the parent, and downgrade requests from the parent to a child. The third field state is the MSI state that the child wants to upgrade to for an upgrade request, or the MSI state that the child should be downgraded to for a downgrade request.

The interface of message FIFO is also defined in CacheTypes.bsv:

interface MessageFifo#(numeric type n);

method Action enq_resp(CacheMemResp d);

method Action enq_req(CacheMemReq d);

method Bool hasResp;

method Bool hasReq;

method Bool notEmpty;

method CacheMemMessage first;

method Action deq;

endinterfaceThe interface has two enqueue methods (enq_resp and enq_req), one for requests and the other for responses. The boolean flags hasResp and hasReq indicate whether is any response or request in the FIFO respectively. The notEmpty flag is simply the OR of hasResp and hasReq. The interface only has one first and one deq method to retrieve one message at a time.

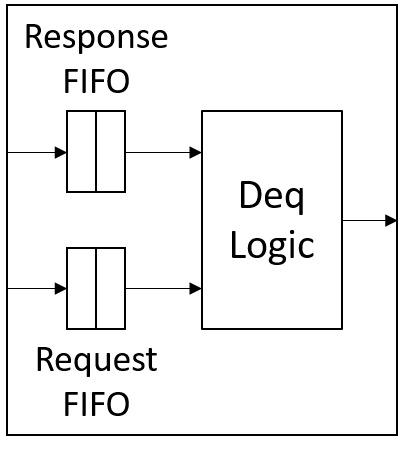

As mentioned in the class, a request should never block a response when they both sit in the same message FIFO. To ensure this point, we could implement the message FIFO using two FIFOs as shown in Figure 2. At the enqueue port, requests are all enqueued into a request FIFO, while responses are all enqueued into another response FIFO. At the dequeue port, response FIFO has priority over request FIFO, i.e. the deq method should dequeue the response FIFO as long as the response FIFO is not empty. The numeric type n in the interface definition is the size of the response/request FIFO.

|

| Figure 2: Structure of a message FIFO |

Exercise 1 (10 Points): Implement the message FIFO (mkMessageFifo module) in src/includes/MessageFifo.bsv. We provide a simple test in the unit_test/message-fifo-test folder. Use make to compile, and use ./simTb to run the test.

Message router

The message router connects all L1 D caches and the parent protocol processor. We will implement this module in src/includes/MessageRouter.bsv. It is declared as:

module mkMessageRouter(

Vector#(CoreNum, MessageGet) c2r, Vector#(CoreNum, MessagePut) r2c,

MessageGet m2r, MessagePut r2m,

Empty ifc

);The MessageGet and MessagePut interfaces are just restricted views of the MessageFifo interface, and they are defined in CacheTypes.bsv:

interface MessageGet;

method Bool hasResp;

method Bool hasReq;

method Bool notEmpty;

method CacheMemMessage first;

method Action deq;

endinterface

interface MessagePut;

method Action enq_resp(CacheMemResp d);

method Action enq_req(CacheMemReq d);

endinterfaceWe have provided the toMessageGet and toMessagePut functions to convert a MessageFifo interface to MessageGet and MessagePut interfaces. Below is an introduction to each module argument:

- c2r is the interface of the message FIFO from each L1 D cache.

- r2c is the interface of the message FIFO to each L1 D cache.

- m2r is the interface of the message FIFO from the parent protocol processor.

- r2m is the interface of the message FIFO to the parent protocol processor.

The major functionality of this module falls into two parts:

- sending messages from the parent (m2r) to the correct L1 D cache (r2c), and

- sending messages from L1 D caches (c2r) to the parent (r2m).

It should be noted that response messages have priority over request messages just like the case in message FIFO.

Exercise 2 (10 Points): Implement the mkMessageRouter module in src/includes/MessageRouter.bsv. We provide a simple test in the unit_test/message-router-test folder. Run the following to compile and run:

$ make $ ./simTb

L1 data cache

The blocking L1 D cache (without store queue) will be implemented in src/includes/DCache.bsv:

module mkDCache#(CoreID id)(MessageGet fromMem, MessagePut toMem, RefDMem refDMem, DCache ifc);Below is the introduction to each module parameter and argument:

- id is the core ID, which will be attached to every message sent to the parent protocol processor.

- fromMem is the interface of the message FIFO from parent protocol processor (or more accurately the message router), so downgrade requests and upgrade responses can be read out from this interface.

- toMem is the interface of the message FIFO to parent protocol processor, so upgrade requests and downgrade responses should be sent to this interface.

- refDMem is for debugging, and currently you do not need to worry about it.

The DCache interface returned by the module is defined in CacheTypes.bsv as follow:

interface DCache;

method Action req(MemReq r);

method ActionValue#(MemResp) resp;

endinterfaceYou may have noticed that the MemOp type (defined in MemTypes.bsv), which is the type of the op field of MemReq structure (defined in MemTypes.bsv), now have five values: Ld, St, Lr, Sc and Fence. For now you only need to handle Ld and St requests. You could add logic in the req method of the DCache interface, which reports error if it detects requests other than Ld or St.

The MemReq type also has a new field rid, which is the ID of the request used for debugging. rid is of type Bit\#(32), and should be unique for each request from the same core.

We will implement a 16-entry direct-mapped L1 D cache (the number of cache lines is defined as type CacheRows in CacheTypes.bsv). We suggest to use vector of registers to implement the cache arrays in order to assign initial values. We have also provided some useful functions in CacheTypes.bsv.

The MSI state type is defined in CacheTypes.bsv:

typedef enum {M, S, I} MSI deriving(Bits, Eq, FShow);We have made MSI type become an instance of the Ord typeclass, so we can apply comparison operator (>, <, >=, <=, etc.) on it. The order is M > S > I.

Exercise 3 (10 Points): Implement the mkDCache module in src/includes/DCache.bsv. This should be a blocking cache without store queue. We provide a simple test in the unit_test/cache-test folder. To compile and test, run

$ make $ ./simTb

Parent protocol processor

The parent protocol processor will be implemented in src/includes/PPP.bsv:

module mkPPP(MessageGet c2m, MessagePut m2c, WideMem mem, Empty ifc);Below is the introduction to each module argument:

- c2m is the interface of the message FIFO from L1 D caches (actually from the message router), and upgrade requests and downgrade responses can be read out from this interface.

- m2c is the interface of the message FIFO to L1 D caches (atually to the message router), and downgrade requests and upgrade responses should be sent to this interface.

- mem is the interface of the main memory, which we have already used in lab 7.

In the lecture, the directory in the parent protocol processor record the MSI states for every possible address. However this will take a significant amount of storage for a 32-bit address space. To reduce the amount of storage needed for the directory, we notice that we only need to track addresses that exist in L1 D caches. Specifically, we could implement the directory as follow:

Vector#(CoreNum, Vector#(CacheRows, Reg#(MSI))) childState <- replicateM(replicateM(mkReg(I)));

Vector#(CoreNum, Vector#(CacheRows, Reg#(CacheTag))) childTag <- replicateM(replicateM(mkRegU));When the parent protocol processor wants to know the approximate MSI state of address a on core i, it can first read out tag=childTag[i][getIndex(a)]. If tag does not match getTag(a), then the MSI state must be I. Otherwise the state should be childState[i][getIndex(a)]. In this way, we dramatically reduce the storage needed by the directory, but we need to maintain the childTag array when there is any change on the children states.

Another difference from the lecture is that the main memory data should be accessed using the mem interface, while the lecture just assumes a combinational read of data.

Exercise 4 (10 Points): Implement the mkPPP module in src/includes/PPP.bsv. We provide a simple test in the unit_test/ppp-test folder. Use make to compile, and use ./simTb to run the test.

This is the end of the first part of the project. On monday 27, I will release the testing of the entire memory hierarchy and the integration.

Final Presentation

Final Presentation

On December 13th from 3 PM to 5 PM, we will have final presentations for this project and some pizza at the end. We would like you to prepare a presentation no more than 10 minutes about your final project. You should talk about the following things:

- How the work is divided among the group members.

- What difficulties or bugs you have encountered, and how you solved them.

- The new things you have added (or you are still adding).